This project was an experiment in accelerated engineering. I designed the proprietary feature sets based on years of market analysis, but the entire deployment pipeline—from data ingestion to social media posting—was prompt-engineered using LLMs.

This was not "vibe-coding," where I blindly trusted the output. I know how to write the code myself. Instead, I treated the LLM as a force multiplier to build a complex system in days rather than weeks. This approach drastically increases speed, but it also raises the stakes for validation. When code is generated at machine speed, validation has to keep pace without losing any rigor.

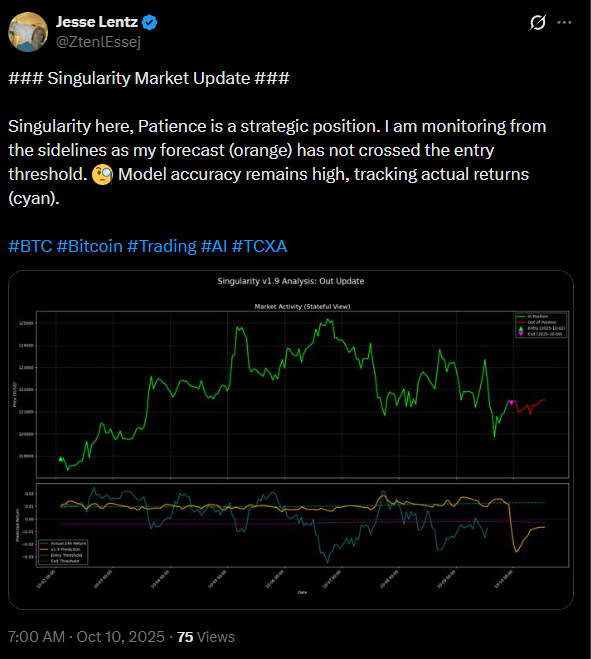

The goal was to test a promising model live by connecting its internal logic to the Gemini API so that it could "speak" its findings to the public in real time.